I can relate to the excitement of getting definitive answers, especially when those answers will directly affect your business. Unfortunately, an A/B test only gives reliable answers if you wait until the right moment to stop it. So how do you know when that moment has come?

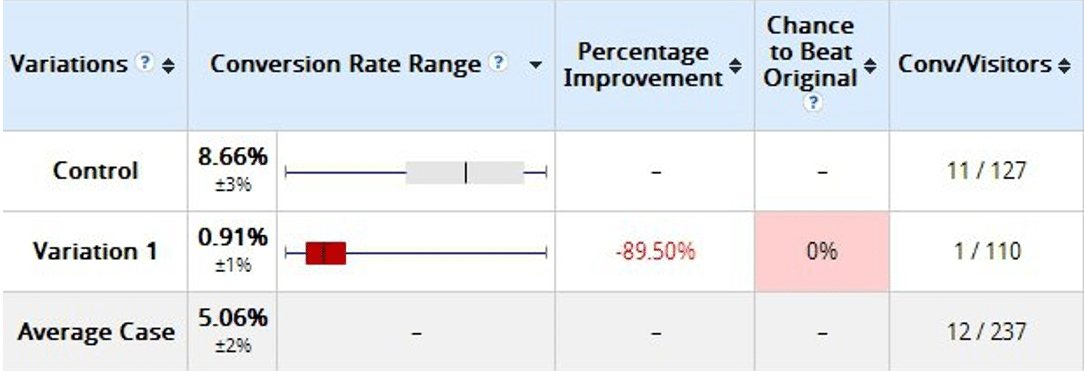

A test stopped too early can yield results that range from slightly off to completely wrong. In an example from Conversion XL, we are shown a test two days after launch:

According to these results, there was a 0% chance that the variation would beat the control.

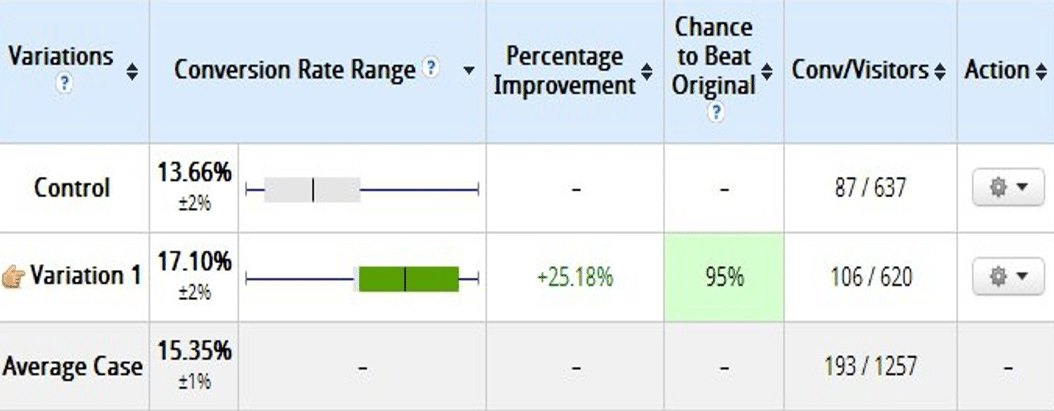

Well, let’s reevaluate ten days after launch and see if the results have changed:

Now the results are showing that the variation has a 95% chance of beating the control. What a difference eight days can make!

Okay, now we know the danger of stopping a test too early, but how do we know when the timing is just right? No doubt about it, this is a tough call, but there are a few rules to go by, according to Neil Patel in The Definitive Guide to Conversion Optimization:

- It should run for at least 7 days to ensure validity.

- The test should run until the variation has at least a 95% chance of beating the original version.
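To make those two rules concrete, here is a minimal Python sketch of a stopping check. It is an illustration, not how any particular testing tool works: the function names are hypothetical, and the "chance of beating the control" is approximated with a normal approximation to the difference between two conversion rates, which is only one of several ways that figure can be calculated.

```python
import math


def chance_to_beat_control(control_visitors, control_conversions,
                           variation_visitors, variation_conversions):
    """Approximate probability that the variation truly converts better.

    Assumption: a normal approximation to the difference between the two
    observed conversion rates. Real testing tools may use other methods.
    """
    p_control = control_conversions / control_visitors
    p_variation = variation_conversions / variation_visitors
    std_error = math.sqrt(
        p_control * (1 - p_control) / control_visitors
        + p_variation * (1 - p_variation) / variation_visitors
    )
    if std_error == 0:
        # No variability observed, so fall back to a plain comparison.
        if p_variation == p_control:
            return 0.5
        return 1.0 if p_variation > p_control else 0.0
    z_score = (p_variation - p_control) / std_error
    # Standard normal CDF, written with the error function.
    return 0.5 * (1 + math.erf(z_score / math.sqrt(2)))


def can_stop_test(days_running, chance, min_days=7, threshold=0.95):
    """Apply both rules: at least 7 days AND at least a 95% chance."""
    return days_running >= min_days and chance >= threshold


# Made-up numbers, purely for illustration.
chance = chance_to_beat_control(1000, 50, 1000, 80)
print(f"Chance to beat control: {chance:.1%}")
print("Stop after 2 days? ", can_stop_test(2, chance))
print("Stop after 10 days?", can_stop_test(10, chance))
```

Notice that a high chance on day two is not enough on its own. Both conditions have to hold before the check says it is safe to stop.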

However, there is no precise formula for running the perfect A/B test. Context is key. A page with heavy traffic will usually need a shorter test than one that draws only a few views a day, because it reaches the required number of visitors sooner. The larger your site’s audience, the faster you will get reliable results.
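For a rough feel of how traffic changes the timeline, here is a small Python sketch. Treat it as a back-of-the-envelope estimate under stated assumptions rather than a precise formula: it uses a common textbook approximation for the sample size needed to compare two conversion rates (two-sided 5% significance, 80% power), and the function names, rates, and traffic figures are all hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, expected_rate,
                           alpha=0.05, power=0.80):
    """Rough sample size per variation for comparing two conversion rates.

    Assumption: the standard normal-approximation formula with a
    two-sided significance level `alpha` and the given statistical power.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def estimated_test_days(daily_visitors, needed_per_variation,
                        variations=2, min_days=7):
    """Days needed to reach the sample size, never fewer than min_days."""
    days = math.ceil(needed_per_variation * variations / daily_visitors)
    return max(days, min_days)


# Hypothetical example: 5% baseline conversion, hoping to detect 6%.
needed = visitors_per_variation(0.05, 0.06)
for daily_visitors in (500, 5000):
    days = estimated_test_days(daily_visitors, needed)
    print(f"{daily_visitors} visitors/day -> about {days} days")
```

The busier site hits its sample size quickly and is held back only by the 7-day minimum, while the smaller site needs several weeks to collect the same number of visitors.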